In this post we are going to learn how to scan LUNs from the host side. It is a common interview question: "Explain step by step how you make a LUN visible on the host side."

First, what exactly does host-side scanning mean? When system administrators request new LUNs, the storage team allocates them as requested. It is then the administrator's job to make them visible at the OS level so that applications can use them.

Making a LUN visible and ready to use on the host takes a few careful steps. When it comes to LUN visibility and storage, the two terms we meet most often are iSCSI target and iSCSI initiator.

The iSCSI initiator is the client that starts the SCSI session and requests the LUNs. In this setup, it is our host server.

The iSCSI target is the storage server that holds the LUNs and presents them over the network. In this setup, it is the OpenFiler node.

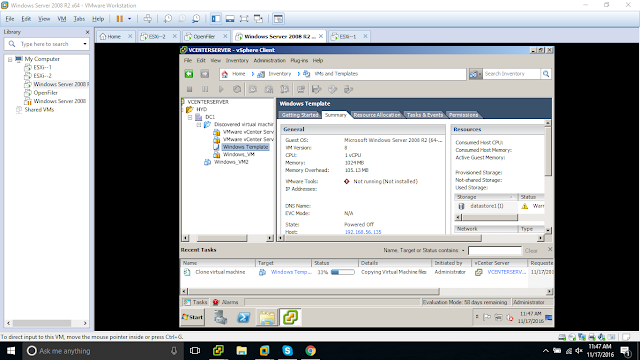

In my scenario, I created 4 LUNs in my OpenFiler storage. Now I will show you the steps to make them visible on my Solaris host.

The steps are:

1. Check whether the iscsitgt service is online, and enable it if it is not.

2. Use the "iscsiadm" command to add our iSCSI target to the host.

3. Run the actual host-side scan with the "devfsadm" command.
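The three steps can be collected into one small script. This is a minimal sketch, not the author's exact procedure: it assumes a Solaris 10 host with bash, reuses the target IQN and IP address from the example later in this post, and the `run` and `scan_iscsi_luns` helper names are hypothetical. By default it only prints the commands (a dry run); set `DRY_RUN=0` and run it as root to actually execute them.

```shell
#!/bin/bash
# Dry-run sketch of the three host-side steps in this post.
# Assumptions: Solaris 10 host, bash, and the example target below.
# DRY_RUN defaults to 1, so commands are printed instead of executed.

TARGET_IQN="iqn.2006-01.com.openfiler:tsn.d896e2cf3975"  # target name from this post
TARGET_ADDR="10.0.0.129:3260"                              # storage IP and iSCSI port

# Print the command in dry-run mode; otherwise execute it.
run() {
    if [ "${DRY_RUN:-1}" = "1" ]; then
        echo "+ $*"
    else
        "$@"
    fi
}

scan_iscsi_luns() {
    # Step 1: enable the service, as done in this post
    # (enabling an already-enabled service changes nothing).
    run svcadm enable iscsitgt
    # Step 2: register the target as a static-config entry.
    run iscsiadm add static-config "${TARGET_IQN},${TARGET_ADDR}"
    # Step 3: create device nodes for devices handled by the iscsi driver.
    run devfsadm -i iscsi
}
```

Calling `scan_iscsi_luns` with the default setting prints the three commands prefixed with `+`, which is a cheap way to review the exact target string before touching a real host.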

Output of the format command before the LUNs are assigned:

```
bash-3.2# echo | format
Searching for disks...

AVAILABLE DISK SELECTIONS:
       0. c0d0 <cyl 2085 alt 2 hd 255 sec 63>
          /pci@0,0/pci-ide@1,1/ide@0/cmdk@0,0
Specify disk (enter its number): Specify disk (enter its number):
bash-3.2#
```
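Saving this "before" listing makes it easy to spot exactly which disks appear after the scan. This is a minimal sketch, assuming bash with awk, sort and comm available; `disk_names` and `new_disks` are hypothetical helper names, and the parsing assumes the numbered "N. cXtYdZ <...>" disk lines shown above.

```shell
#!/bin/bash
# Sketch: extract disk names from "echo | format" output so that a
# listing taken before the scan can be compared with one taken after it.

disk_names() {
    # Numbered disk lines look like "0. c0d0 <...>": print the second
    # field (the disk name), sorted so that comm can compare the lists.
    awk '$1 ~ /^[0-9]+\.$/ { print $2 }' | sort
}

new_disks() {
    # Print lines found only in the second file, i.e. disks that
    # appeared between the two listings. Both files must be sorted.
    comm -13 "$1" "$2"
}
```

For example, pipe `echo | format` through `disk_names` into `before.txt` before the scan and into `after.txt` after it, then run `new_disks before.txt after.txt` to list only the newly visible disks.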

Now let's go through the steps. Step 1: check the iscsitgt service and enable it.

```
bash-3.2# svcs iscsitgt
STATE          STIME    FMRI
disabled       15:12:02 svc:/system/iscsitgt:default
bash-3.2#
bash-3.2# svcadm enable iscsitgt
bash-3.2#
bash-3.2# svcs iscsitgt
STATE          STIME    FMRI
online         17:34:06 svc:/system/iscsitgt:default
bash-3.2#
```
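The same check can be scripted so that the service is only enabled when needed. This is a minimal sketch: it assumes the Solaris SMF tools, where `svcs -H -o state` prints just the state column without a header and `svcadm enable -s` waits until the service comes online; `ensure_online` is a hypothetical helper name.

```shell
#!/bin/bash
# Sketch: enable an SMF service only when it is not already online.

ensure_online() {
    local svc="$1" state
    # -H drops the header line, -o state prints only the STATE column.
    state=$(svcs -H -o state "$svc") || return 1
    if [ "$state" = "online" ]; then
        echo "$svc already online"
        return 0
    fi
    echo "$svc is $state, enabling"
    # -s makes svcadm wait until the service is online (or degraded).
    svcadm enable -s "$svc"
}
```

For example, `ensure_online iscsitgt` reports "iscsitgt already online" on the second run instead of re-enabling the service.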

Then step 2: add the iSCSI target. First, list the current static configuration.

```
bash-3.2# iscsiadm list static-config
bash-3.2#
```

The command prints nothing, so no static targets are configured yet.

Add the iSCSI target. Be careful to pick the right target name when the storage side exports many targets.

```
bash-3.2# iscsiadm add static-config iqn.2006-01.com.openfiler:tsn.d896e2cf3975,10.0.0.129:3260
bash-3.2#
```

The argument is the target IQN, a comma, then the storage IP address and port. Port 3260 is the default TCP port for iSCSI traffic. Verify the new entry:

```
bash-3.2# iscsiadm list static-config
Static Configuration Target: iqn.2006-01.com.openfiler:tsn.d896e2cf3975,10.0.0.129:3260
bash-3.2#
```
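Because a repeated or mistyped target name is the easiest thing to get wrong here, a script can check the entry before adding it. This is a minimal sketch: `add_static_target` is a hypothetical helper that uses only the `iscsiadm list static-config` and `iscsiadm add static-config` forms shown above, and the IQN check is a rough shape test (`iqn.yyyy-mm.` followed by the rest of the name), not full validation. Depending on the host, static discovery may also need to be enabled before static-config entries take effect (`iscsiadm modify discovery --static enable`); the post does not show that step, so treat it as something to check rather than a required command.

```shell
#!/bin/bash
# Sketch: add an iSCSI static-config entry only if it is not already listed.

add_static_target() {
    local iqn="$1" addr="$2"
    # Rough shape check for an IQN: iqn.yyyy-mm.<reversed domain>...
    local re='^iqn\.[0-9]{4}-[0-9]{2}\.[^[:space:]]+$'
    if ! [[ $iqn =~ $re ]]; then
        echo "not an IQN: $iqn" >&2
        return 1
    fi
    local entry="${iqn},${addr}"
    # Listed entries look like "Static Configuration Target: <iqn>,<ip>:<port>".
    if iscsiadm list static-config | grep -qF "Target: ${entry}"; then
        echo "already configured: ${entry}"
        return 0
    fi
    iscsiadm add static-config "$entry"
}
```

For example, `add_static_target iqn.2006-01.com.openfiler:tsn.d896e2cf3975 10.0.0.129:3260` adds the entry once and reports "already configured" on later runs.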

Then the final step: scan for the new disks on the host.

```
bash-3.2# devfsadm -i iscsi
bash-3.2#
bash-3.2# echo | format
Searching for disks...

AVAILABLE DISK SELECTIONS:
       0. c0d0 <cyl 2085 alt 2 hd 255 sec 63>
          /pci@0,0/pci-ide@1,1/ide@0/cmdk@0,0
       1. c2t2d0 <OPNFILE-VIRTUAL-DISK -0 cyl 1529 alt 2 hd 128 sec 32>
          /iscsi/disk@0000iqn.2006-01.com.openfiler%3Atsn.d896e2cf39750001,0
       2. c2t3d0 <OPNFILE-VIRTUAL-DISK -0 cyl 2043 alt 2 hd 128 sec 32>
          /iscsi/disk@0000iqn.2006-01.com.openfiler%3Atsn.d896e2cf39750001,1
       3. c2t4d0 <OPNFILE-VIRTUAL-DISK -0 cyl 1017 alt 2 hd 64 sec 32>
          /iscsi/disk@0000iqn.2006-01.com.openfiler%3Atsn.d896e2cf39750001,2
       4. c2t5d0 <OPNFILE-VIRTUAL-DISK -0 cyl 505 alt 2 hd 64 sec 32>
          /iscsi/disk@0000iqn.2006-01.com.openfiler%3Atsn.d896e2cf39750001,3
Specify disk (enter its number): Specify disk (enter its number):
bash-3.2#
```
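To confirm which disks came from the iSCSI target, the format listing can be filtered on the device path. This is a minimal sketch, assuming the two-lines-per-disk layout shown above; `list_iscsi_disks` is a hypothetical helper name. It also assumes that the number after the last comma in an /iscsi path is the LUN number (the four OpenFiler LUNs show up as ,0 to ,3); that reading, and leaving the rest of the path uninterpreted, are assumptions rather than something this post states.

```shell
#!/bin/bash
# Sketch: print "<disk> <lun>" for each disk in "echo | format" output
# whose device path lies under /iscsi.

list_iscsi_disks() {
    awk '
        # Numbered disk line, e.g. "1. c2t2d0 <...>": remember the name.
        $1 ~ /^[0-9]+\.$/ { disk = $2; next }
        # Path line under /iscsi: print the remembered disk and the
        # field after the last comma (assumed to be the LUN number).
        $1 ~ /^\/iscsi\// {
            n = split($1, parts, ",")
            print disk, parts[n]
        }
    '
}
```

For example, `echo | format | list_iscsi_disks` on the host above would be expected to print c2t2d0 through c2t5d0 with LUNs 0 to 3, and skip the local c0d0 disk.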

If the environment has EMC storage, PowerPath has its own commands for scanning LUNs. Similarly, to bring these LUNs under Veritas Volume Manager control, we scan them with the "vxdisk scandisks" command.

I covered those scenarios in an earlier post:

https://solarishandy.blogspot.com/2015/02/making-luns-visible-at-host-side.html

#####################################################################################